For a more comprehensive summary of my work, please see my author profile on INSPIRE.

A selection of slides from research talks can also be found below.

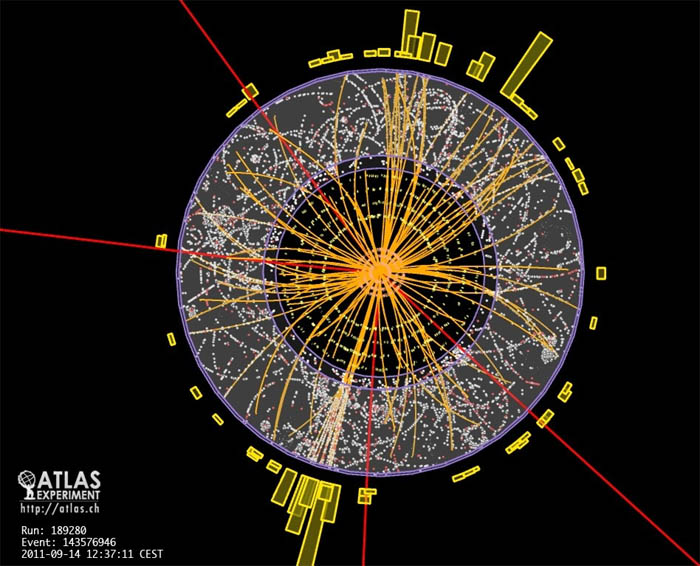

The Large Hadron Collider has recently resumed operation at the unprecedented collision energy $\sqrt{s} = 13 \text{ TeV}$. As the first wave of data continues to be collected and analyzed, there is a tremendous amount of exciting work to be done at the interface between theory and experiment.

For example, there are novel signatures that might be missed by the current suite of searches. If dark matter particles are produced aligned with a jet of visible hadronic activity, then such events could be vetoed by the cuts on the azimuthal angle $\Delta \phi$ between the missing energy and the jets that are currently used by the LHC collaborations. This was explored in the paper Semi-visible Jets by TC, Mariangela Lisanti, and Hou Keong Lou [arXiv:1503.00009].
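
To make the veto concrete, here is a minimal sketch of a $\Delta\phi$ requirement of the kind described above. When the invisible particles travel along a visible jet, the missing-energy direction points along that jet, so the smallest $\Delta\phi$ is small and the event is removed. The sketch assumes jets are already ordered by transverse momentum and are represented only by their azimuthal angles. The threshold of 0.4 and the restriction to the four leading jets are illustrative assumptions, not the cuts of any particular analysis.

```python
import math
from typing import Sequence

def delta_phi(phi1: float, phi2: float) -> float:
    """Azimuthal separation between two directions, wrapped into [0, pi]."""
    dphi = abs(phi1 - phi2) % (2.0 * math.pi)
    return 2.0 * math.pi - dphi if dphi > math.pi else dphi

def min_delta_phi(met_phi: float, jet_phis: Sequence[float], n_leading: int = 4) -> float:
    """Smallest separation between the missing-energy direction and the leading jets.

    Assumes jet_phis is ordered by decreasing jet pT; returns +inf if there are no jets.
    """
    leading = jet_phis[:n_leading]
    if not leading:
        return math.inf
    return min(delta_phi(met_phi, phi) for phi in leading)

def passes_dphi_cut(met_phi: float, jet_phis: Sequence[float], threshold: float = 0.4) -> bool:
    """True if the event survives; events with missing energy aligned with a jet are vetoed."""
    return min_delta_phi(met_phi, jet_phis) > threshold

# Missing energy pointing along the leading jet, as for a semi-visible jet: vetoed.
print(passes_dphi_cut(0.1, [0.15, 3.0]))   # False
# Missing energy well separated from all jets: kept.
print(passes_dphi_cut(0.0, [3.0, -2.5]))   # True
```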

Another class of signatures that are difficult to distinguish from background could result from new particles that are produced and then decay to high-multiplicity hadronic final states with little or no missing energy. One approach that has proven useful in searching for these signals is to cluster the final state into so-called "fat jets" with a large radius $R\simeq 1$. Exploiting the differences between the internal structure of signal fat jets and that of the enormous QCD background allows for dramatic gains in sensitivity. We designed such a search in the paper Jet Substructure by Accident by TC, Eder Izaguirre, Mariangela Lisanti, and Hou Keong Lou [arXiv:1212.1456]. We also developed techniques that enable a novel data-driven background determination in the paper Jet Substructure Templates by TC, Martin Jankowiak, Mariangela Lisanti, Hou Keong Lou, and Jay G. Wacker [arXiv:1402.0516].
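
One of the simplest substructure observables is the invariant mass of a fat jet, computed from the summed four-momenta of its constituents. A jet initiated by a single light QCD parton tends to be light relative to its energy, while a fat jet that captures several hard decay products of a heavy particle tends to be heavier. The sketch below illustrates the observable only; it is not the specific set of variables used in the papers above. It assumes the constituents have already been clustered into a jet and are given as hypothetical `(E, px, py, pz)` tuples in GeV.

```python
import math
from typing import Iterable, Tuple

# Hypothetical constituent format: (E, px, py, pz) in GeV.
FourMomentum = Tuple[float, float, float, float]

def jet_mass(constituents: Iterable[FourMomentum]) -> float:
    """Invariant mass of the summed constituent four-momenta, m^2 = E^2 - |p|^2."""
    e = px = py = pz = 0.0
    for c_e, c_px, c_py, c_pz in constituents:
        e += c_e
        px += c_px
        py += c_py
        pz += c_pz
    m2 = e * e - (px * px + py * py + pz * pz)
    # Clamp tiny negative values caused by floating-point rounding.
    return math.sqrt(max(m2, 0.0))

# A single massless constituent has zero mass.
print(jet_mass([(100.0, 100.0, 0.0, 0.0)]))  # 0.0
# Two 100 GeV massless constituents with cos(theta) = 0.8: m^2 = 2 * 100 * 100 * (1 - 0.8).
print(round(jet_mass([(100.0, 100.0, 0.0, 0.0), (100.0, 80.0, 60.0, 0.0)]), 1))  # 63.2
```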

Observations of the cosmic microwave background, galactic rotation curves, and merging galaxy clusters (such as the Bullet Cluster) provide overwhelming evidence for the existence of an enormous abundance of a new stable (or very long-lived) neutral particle, referred to as dark matter. Precision measurements of the cosmic microwave background imply that dark matter constitutes around 27% of the total energy budget of our Universe, about five times the abundance of the matter described by the Standard Model of particle physics! Understanding how dark matter talks to the Standard Model, and what its properties are (or at least constraining what they are not), is of paramount importance to our mission of understanding the microscopic properties of nature.

In our recent paper Gamma-ray Constraints on Decaying Dark Matter and Implications for IceCube by TC, Kohta Murase, Nicholas L. Rodd, Benjamin R. Safdi, and Yotam Soreq [arXiv:1612.05638], we provided updated constraints on scenarios where the dark matter is an unstable particle with a very long lifetime. If the dark matter had a mass $m_\chi \sim 10 \text{ PeV}$ and decayed with a characteristic lifetime $\tau \sim 10^{27} \text{ s}$, then it could lead to observable signatures both in gamma-ray experiments, such as the Fermi space telescope, and in neutrino experiments, such as IceCube. In particular, the neutrinos with PeV energies observed by IceCube could have been a signature of decaying dark matter. Our work provides a novel analysis of the Fermi data and demonstrates that, for the majority of possible Standard Model final states, the decaying dark matter interpretation of the IceCube data is incompatible with the gamma-ray observations. The conclusion is that the high-energy neutrino data are most likely explained by an astrophysical source.
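
A quick check on the numbers quoted above: a lifetime $\tau \sim 10^{27}$ s is enormous compared with the age of the Universe, so only a tiny fraction of the dark matter has decayed and the dark matter density today is essentially unchanged. Any observable signal comes from the huge number of dark matter particles, each with a small decay probability. The sketch below takes the age of the Universe to be about 13.8 Gyr, an input assumed here rather than taken from the text.

```python
import math

SECONDS_PER_YEAR = 3.156e7                       # approximate length of a year in seconds
AGE_OF_UNIVERSE_S = 13.8e9 * SECONDS_PER_YEAR    # assumed ~13.8 Gyr

def decayed_fraction(lifetime_s: float, elapsed_s: float = AGE_OF_UNIVERSE_S) -> float:
    """Fraction of an initial population that has decayed after elapsed_s: 1 - exp(-t/tau)."""
    # expm1 keeps full precision when t/tau is tiny.
    return -math.expm1(-elapsed_s / lifetime_s)

tau = 1e27  # seconds, the characteristic lifetime quoted above
print(f"tau / t_U ~ {tau / AGE_OF_UNIVERSE_S:.1e}")
print(f"fraction decayed ~ {decayed_fraction(tau):.1e}")
```

With these inputs the lifetime exceeds the age of the Universe by a factor of a few billion, and the decayed fraction is of order $10^{-10}$.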

We are also interested in more theoretical topics, such as the application of effective field theory techniques to dark matter processes. One example of this kind of work is the paper Soft Collinear Effective Theory for Heavy WIMP Annihilation by Martin Bauer, TC, Richard J. Hill, and Mikhail P. Solon [arXiv:1409.7392]. In this paper, we provided the first application of soft-collinear effective theory to a heavy weakly interacting dark matter candidate $\chi$ annihilating to photons $\gamma$ in the Universe today. Effective field theory approaches are required when a large separation of scales leads to large logarithms, which can spoil the convergence of perturbation theory. In our case, these are the so-called Sudakov logarithms $\sim \alpha_W \log^2(m_\text{DM}/m_W)$, where $\alpha_W$ is the weak coupling constant, $m_\text{DM}$ is the dark matter mass, and $m_W$ is the mass of the $W$ boson. Our paper established the correct effective description of the process $\chi\,\chi \rightarrow \gamma\,\gamma$. We could then resum the associated logarithms and match onto a non-relativistic description, which allowed us to incorporate an important long-distance effect known as the Sommerfeld enhancement.
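
To see why these logarithms matter, one can estimate the size of $\alpha_W \log^2(m_\text{DM}/m_W)$ for a few dark matter masses. This is only an order-of-magnitude sketch: it drops the $\mathcal{O}(1)$ coefficients and factors of $\pi$ that appear in the actual perturbative expansion, and it assumes $\alpha_W \approx 0.034$ and $m_W \approx 80.4$ GeV, inputs not given in the text. The masses in the loop are illustrative choices.

```python
import math

ALPHA_W = 0.034   # assumed SU(2) coupling g^2 / (4 pi) near the weak scale
M_W_GEV = 80.4    # assumed W-boson mass in GeV

def sudakov_estimate(m_dm_gev: float) -> float:
    """Rough size of alpha_W * log^2(m_DM / m_W), with O(1) prefactors dropped."""
    return ALPHA_W * math.log(m_dm_gev / M_W_GEV) ** 2

for m_dm_tev in (1, 3, 10, 30):
    print(f"m_DM = {m_dm_tev:>2} TeV: alpha_W log^2 ~ {sudakov_estimate(1e3 * m_dm_tev):.2f}")
```

The estimate grows from roughly 0.2 at 1 TeV to above 1 at 30 TeV, which is why fixed-order perturbation theory becomes unreliable for multi-TeV dark matter and the logarithms must be resummed.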

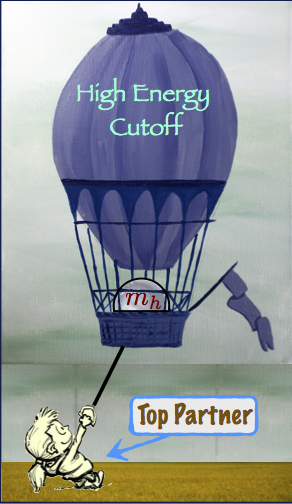

In the absence of a protection mechanism (usually due to the presence of a symmetry), quantum mechanical effects tend to drive all dimensionful parameters toward the largest energy scale in the theory. This issue is particularly acute in the Standard Model, since there is no a priori symmetry that protects the Higgs mass parameter $m_H^2$. The electroweak naturalness problem can then be stated as the challenge of understanding the very small ratio $m_H^2/m_\text{pl}^2 \sim 10^{-32}$, where $m_\text{pl}\sim 10^{18} \text{ GeV}$ is the scale associated with strong gravitational effects. The simplest approaches to explaining this so-called hierarchy problem invoke either "supersymmetry" or a "global symmetry" (which could be the result of new strong dynamics). This is the motivation for the wide variety of searches that are ongoing at the LHC. Specifically, supersymmetry requires the addition of new top-partner states that act to protect the Higgs mass parameter from the dangerous quantum corrections. The non-observation of these superpartners so far motivates work on less standard solutions to the electroweak naturalness question.
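
The quoted ratio follows from simple arithmetic. The sketch takes $|m_H| \sim 125$ GeV as an order-of-magnitude stand-in for the Higgs mass parameter. This is an assumption, since the parameter differs from the physical Higgs mass by an $\mathcal{O}(1)$ factor. It combines this with $m_\text{pl} \sim 10^{18}$ GeV from the text. The result means the quantum corrections must cancel to about one part in $10^{32}$.

```python
import math

M_H_GEV = 125.0    # assumed stand-in for |m_H|, order of magnitude only
M_PL_GEV = 1e18    # m_pl ~ 10^18 GeV, as in the text

ratio = (M_H_GEV / M_PL_GEV) ** 2
print(f"m_H^2 / m_pl^2 ~ {ratio:.1e}")                          # prints 1.6e-32
print(f"tuning: about 1 part in 10^{-math.log10(ratio):.0f}")   # prints 10^32
```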

This was the point of view taken in the paper $N$naturalness by Nima Arkani-Hamed, TC, Raffaele D'Agnolo, Anson Hook, Hyung Do Kim, and David Pinner [arXiv:1607.06821], where we solved the hierarchy problem in a novel way that relies on the cosmological history of the Universe. We introduced a huge number $N$ of new, independent copies of the Standard Model particles, where $N$ can be as large as $10^{16}$. The Higgs mass parameter is assumed to take an independent random value in the range $-m_\text{pl}^2 \lesssim m_H^2 \lesssim m_\text{pl}^2$ in each of these copies. With so many copies, there will exist one whose parameter is the negative value closest to zero, with $|m_H| \ll m_\text{pl}$. Finally, the mechanism developed in our paper shows how to dynamically achieve a situation where the majority of the energy density is contained in one special sector, which reproduces a Universe like the one we occupy today. The punchline is that the main observations that can be used to constrain the $N$naturalness scenario are cosmological in origin. The Standard Model could appear fine-tuned from the point of view of collider experiments alone, but by studying the cosmic microwave background, the details of big bang nucleosynthesis, and the large-scale distribution of galaxies, we could gain clues about the nature of naturalness.
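
The statistical part of this argument can be illustrated with a toy Monte Carlo. It draws a hypothetical number of sectors $N$ and gives each a Higgs mass parameter uniformly distributed over the allowed range, measured in units of $m_\text{pl}^2$. It then records the negative value closest to zero. The uniform distribution and the modest values of $N$ are assumptions made to keep the sketch fast. The point is only that the smallest $|m_H^2|$ among the negative values shrinks roughly like $1/N$, so with enough copies one sector sits far below the cutoff without any tuning.

```python
import random

def smallest_negative_mass_sq(n_sectors: int, rng: random.Random) -> float:
    """Toy model: each sector gets m_H^2 drawn uniformly from [-1, 1] (units of m_pl^2).

    Returns |m_H^2| for the negative value closest to zero, or 1.0 if no sector
    has a negative mass parameter.
    """
    best = 1.0
    for _ in range(n_sectors):
        m2 = rng.uniform(-1.0, 1.0)
        if m2 < 0.0:
            best = min(best, -m2)
    return best

rng = random.Random(12345)  # fixed seed so the sketch is reproducible
for n in (10, 1_000, 100_000):
    val = smallest_negative_mass_sq(n, rng)
    print(f"N = {n:>7}: smallest |m_H^2| / m_pl^2 ~ {val:.1e}  (compare 2/N = {2 / n:.1e})")
```

Since roughly half of the $N$ draws are negative, the expected smallest magnitude is close to $2/N$, which the printed comparison makes easy to check by eye.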

Staying with the theme of non-standard approaches to naturalness, we worked on understanding and extending the parameter space of a model of "neutral naturalness." This is the broad class of theories in which the top partners do not carry the same Standard Model charges as the top quark. While this requires some additional model-building overhead, the signatures associated with naturalness are dramatically different. Our paper Folded Supersymmetry with a Twist by TC, Nathaniel Craig, Hou Keong Lou, and David Pinner [arXiv:1508.05396] provided an extension of the original model in the form of a Scherk-Schwarz twist. This allowed us to maintain the calculability of the original theory while providing enough handles to ensure that electroweak symmetry is broken and that the low-energy theory reproduces the physics of the Standard Model.